As with many startups, Flipdish started out with a single developer rapidly putting together a proof of concept, with the codebase and tech infrastructure growing organically as the business grew. When you have no customers it doesn't make sense to automate every single thing, but once you grow to thousands of customers it starts to make a lot of sense to automate away much of the repetitive work.

In the past, when a new restaurant signed up with Flipdish, they gave us all their info (opening hours, menu, delivery zone etc.) and our onboarding team entered the data. A year ago we decided to fully automate the onboarding process at Flipdish. This had become more and more appealing, as we were manually setting up close to 100 customers each month.

Let me tell you about how we used to handle setting up new websites for customers, and how we changed our architecture to allow self-service website creation.

How it used to work

Flipdish websites are generated by a multi-tenant ASP.NET MVC app (codenamed Zeus) hosted on Azure App Services. This is basically a hosted version of IIS with a web-based interface (and API) to configure the web-server.

When we want to add a new website, it's a two-step process for us. First, we set up the website on our own platform and enter the domain (actually the hostname) that it will use into our system. This tells Zeus what content to serve based on the hostname the request is coming in on. For example, if Zeus receives a request with the hostname www.pizzashop1.com, it will serve up a different website than it would for a request with the hostname www.curryshop2.com.
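To make the hostname-based routing concrete, here is a minimal Python sketch of the lookup. Zeus itself is an ASP.NET MVC app, so this is illustrative only; `SITES_BY_HOST`, the site IDs and the function names are hypothetical stand-ins for our real data store. It lowercases the Host header and drops any port before the lookup, since hostnames are case-insensitive and a Host value can arrive as something like `WWW.PizzaShop1.com:443`.

```python
from typing import Optional

# Hypothetical hostname -> site mapping; the real one lives in our database.
SITES_BY_HOST = {
    "www.pizzashop1.com": "pizzashop1",
    "www.curryshop2.com": "curryshop2",
}


def normalise_host(host_header: str) -> str:
    """Lowercase the Host header value and drop any explicit port."""
    host = host_header.strip().lower()
    # For plain DNS names, a trailing ':<digits>' can only be a port.
    name, sep, port = host.rpartition(":")
    if sep and port.isdigit():
        host = name
    # Treat the fully qualified form 'www.example.com.' like 'www.example.com'.
    return host.rstrip(".")


def site_for_request(host_header: str) -> Optional[str]:
    """Return the site to render for this Host header, or None if unknown."""
    return SITES_BY_HOST.get(normalise_host(host_header))
```

For example, `site_for_request("WWW.CurryShop2.com:443")` returns `"curryshop2"`, while an unregistered hostname returns `None`.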

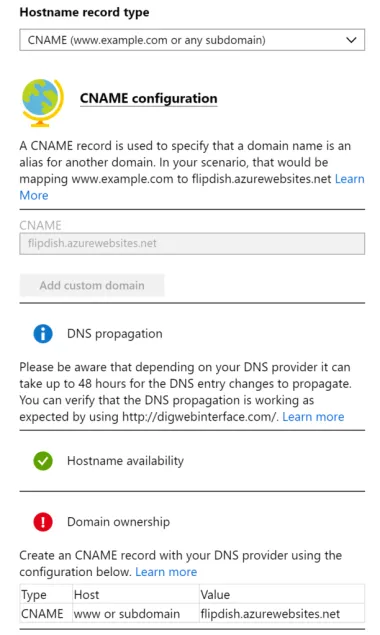

But before these requests can get as far as Zeus, Microsoft requires that we register the hostname (they call it a domain, but really they mean hostname) with the App Service.

Once that's done, we're good to go - requests are safely routed to our web app and it knows what content to serve up based on the request hostname.

So, what's wrong with this?

Firstly, adding a new hostname to an Azure website causes it to reload its config or restart the web server entirely, which effectively means a minute or two of downtime for every site hosted on that app. This severely limits when we can actually set new sites live. And as we start to work with more customers in countries with unusual names in faraway time zones (we had a new signup from Trinidad and Tobago last week. Yes, both.), this gets more problematic.

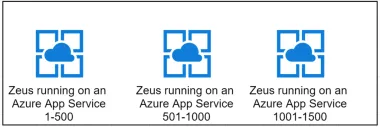

Secondly, when we hit 500 hostnames we got an annoying "Compooter says no" message when trying to add another. We had reached a limit on Azure Web Apps and needed to spin up another instance to continue. Running multiple instances of IIS/Azure App Services isn't ideal, as each instance carries its own memory overhead and adds complexity to our CI/CD pipeline: we now need to deploy to three instances in parallel instead of one.

We set our sights on a solution that would meet the following criteria:

- Zero downtime when adding new websites

- Handle 10,000+ websites

- Fully automated, including automated issuing and renewing of SSL certificates

- Built-in redundancy to ensure little or no downtime due to hardware failures

Our options looked like this:

Continue to terminate connections on Azure App Services

This was very much a known quantity and a safe option. We could automate adding new hostnames using Azure's APIs and PowerShell scripts. But needing to run and maintain 20+ different apps to work around the limit of 500 hostnames per app wasn't ideal, and the downtime when adding each new hostname was a showstopper. It felt like this solution was never going to scale in the way we needed.

Terminate on a self-hosted IIS instance

IIS proper can handle thousands of certificates and hostnames with ease. This was a legitimate option, but moving to a self-hosted IIS after years in the cloud seemed like a strange move back into the dark ages, bringing with it a host of maintenance and scalability issues.

Or, we could introduce a proxy that does some magic with the request, allowing us to route it to Zeus without registering a new hostname with Azure for each client.

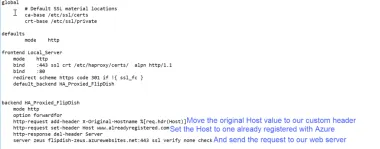

We would use a Layer 7 proxy that terminates the connection (which means handling the SSL certificates on the proxy), changes the Host header to a hostname already registered with Azure, and records the original hostname in a separate header, so that the request is still delivered to Zeus.

A request looks like this:

    GET / HTTP/1.1
    Host: www.newexamplerestaurant.com

and the proxy would change it to:

    GET / HTTP/1.1
    Host: www.alreadyregistered.com
    X-Original-Hostname: www.newexamplerestaurant.com
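The same rewrite, sketched in Python over a plain dictionary of headers. `REGISTERED_HOST` is the placeholder hostname from the example, and dropping any client-supplied X-Original-Hostname is our own assumption about sensible hygiene rather than something described above: it guarantees the header Zeus sees was set by the proxy.

```python
from typing import Dict, Optional

REGISTERED_HOST = "www.alreadyregistered.com"  # placeholder from the example
ORIGINAL_HOST_HEADER = "X-Original-Hostname"


def rewrite_headers(headers: Dict[str, str]) -> Dict[str, str]:
    """Swap Host for the registered hostname, preserving the original value."""
    original: Optional[str] = None
    rewritten: Dict[str, str] = {}
    for name, value in headers.items():
        lowered = name.lower()  # HTTP header names are case-insensitive
        if lowered == "host":
            original = value
        elif lowered == ORIGINAL_HOST_HEADER.lower():
            continue  # drop any client-supplied copy so it can't be spoofed
        else:
            rewritten[name] = value
    rewritten["Host"] = REGISTERED_HOST
    if original is not None:
        rewritten[ORIGINAL_HOST_HEADER] = original
    return rewritten
```

Calling `rewrite_headers({"Host": "www.newexamplerestaurant.com"})` returns `{"Host": "www.alreadyregistered.com", "X-Original-Hostname": "www.newexamplerestaurant.com"}`, matching the request shown above.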

Once the request reaches the Azure App Service, it believes the request was made to www.alreadyregistered.com and handles it accordingly. We then change Zeus to check for an X-Original-Hostname header and, if it is present, use it rather than Host to work out what content to serve.
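On the Zeus side, the change amounts to a header precedence rule. Here is a minimal Python sketch of that logic; Zeus's actual ASP.NET code isn't shown here, and `effective_hostname` is a hypothetical name.

```python
from typing import Mapping, Optional


def effective_hostname(headers: Mapping[str, str]) -> Optional[str]:
    """Prefer X-Original-Hostname (set by the proxy), else fall back to Host."""
    # Header names are case-insensitive, so index them by lowercase name.
    lowered = {name.lower(): value for name, value in headers.items()}
    original = lowered.get("x-original-hostname", "").strip()
    if original:
        return original
    return lowered.get("host")
```

Falling back to Host means sites whose hostnames are still registered directly with the App Service keep working unchanged.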

So the hunt for a suitable proxy began, and we investigated the following options.

Terminate on Azure Application Gateway

Azure Application Gateway is a Layer 7 gateway and could rewrite the request as we needed. It is a hosted service, which meant we wouldn't need to worry about keeping VMs running.

But it turned out that Azure Application Gateway has even lower limits on the number of hostnames and SSL certificates that can be registered. It only handles up to 100 per gateway, so it would have been a step in the wrong direction.
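A quick back-of-the-envelope comparison against our 10,000-site target shows how far off this was, assuming each site uses one hostname/certificate slot:

$$
\left\lceil \frac{10\,000}{100} \right\rceil = 100 \text{ Application Gateways}
\quad\text{vs.}\quad
\left\lceil \frac{10\,000}{500} \right\rceil = 20 \text{ App Services}
$$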

Terminate on an AWS Gateway

We looked at AWS's offerings and found them to have limits similar to Azure Application Gateway's. In short, we were not going to be able to add 10,000 SSL certificates to a single gateway or proxy.

Terminate on NGINX

NGINX is an awesome web server and proxy bundled into one. Its proxy feature would allow us to terminate the connections, mess with the headers and pass the request on to Zeus, in the same way we had planned with Azure Application Gateway. The downside was that we couldn't find any information online about how many SSL certificates and hostnames NGINX could handle. And since we weren't going to need NGINX's web server features at all, we thought we might be better off using the mother of all proxies instead... HAProxy.

Terminate on HAProxy

HAProxy is the proxy: the most widely used, the best known and the best supported. Internet folklore suggested that HAProxy could easily handle 10,000 SSL certificates and hostnames.

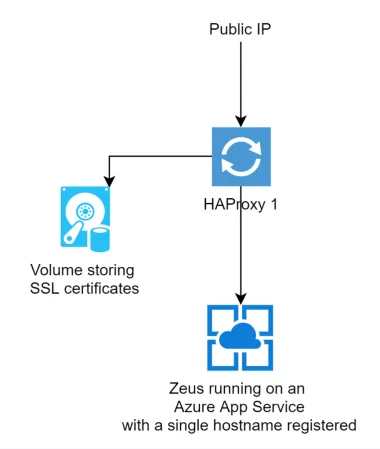

Our initial plan was to run HAProxy on a VM, configured as a Layer 7 proxy, where it would host the SSL certificates and terminate the connections. It would rewrite the headers so that Zeus knows which website to serve up, as described above.

Our HAProxy configuration looks a little like this:

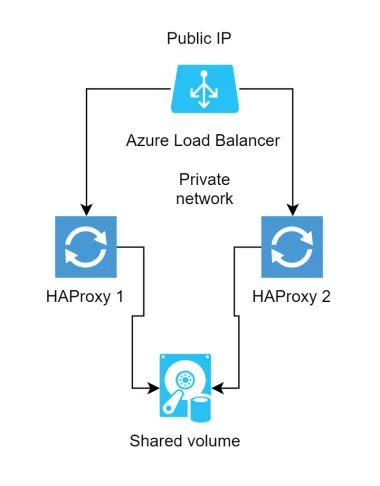
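The screenshot isn't reproduced as text here. As a rough sketch of what such a configuration involves, the hypothetical Python helper below renders a minimal HAProxy frontend/backend pair that performs the Host rewrite. It is a fragment (no `global` or `defaults` sections, so no timeouts), and the certificate directory, CA bundle path and backend address are placeholders, not our production values.

```python
def render_haproxy_config(registered_host: str, backend_addr: str,
                          cert_dir: str = "/etc/haproxy/certs/",
                          ca_file: str = "/etc/ssl/certs/ca-certificates.crt") -> str:
    """Render a minimal frontend/backend pair for the Host-rewriting proxy."""
    lines = [
        "frontend https_in",
        "    mode http",
        # Pointing crt at a directory loads every certificate in it; HAProxy
        # then chooses one per connection based on the client's SNI hostname.
        f"    bind :443 ssl crt {cert_dir}",
        # set-header replaces any existing header of the same name. Copy the
        # client's Host first, then overwrite Host: the order matters.
        "    http-request set-header X-Original-Hostname %[req.hdr(host)]",
        f"    http-request set-header Host {registered_host}",
        "    default_backend zeus",
        "",
        "backend zeus",
        "    mode http",
        # Re-encrypt towards the App Service, presenting the registered name as SNI.
        f"    server zeus1 {backend_addr} ssl verify required"
        f" ca-file {ca_file} sni str({registered_host})",
    ]
    return "\n".join(lines) + "\n"
```

For example, `print(render_haproxy_config("www.alreadyregistered.com", "zeus.example.net:443"))` prints the fragment; `zeus.example.net` is a made-up address.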

And the overall architecture looks like this:

This looked good, but it didn't meet two of the criteria we had set out. First, we would have downtime when adding new certificates, as this required a restart of HAProxy. A restart is ultra fast under normal circumstances, but takes 20 seconds or so when HAProxy needs to load 10,000 SSL certificates. Second, it was not a resilient solution: if the VM hosting the single HAProxy instance went down, we would be in trouble.

This led us to a more complex solution, but one that met all of our needs.

We would instead use two instances of HAProxy, each hosted on a separate VM. The VMs would read the SSL certificates from a shared virtual hard drive (which comes with a 99.999% availability SLA). When we needed to add a new certificate, we would divert all traffic to HAProxy1, restart HAProxy2, divert all traffic to HAProxy2, restart HAProxy1, and then return to sending traffic to both HAProxy instances.
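The certificate roll-out described above is a classic rolling restart. Here is a minimal Python sketch of the sequence; `set_weights`, `restart` and `wait_healthy` are hypothetical hooks standing in for whatever actually steers traffic between the two VMs and restarts HAProxy. A real version would presumably also wait for in-flight connections to drain before each restart.

```python
from typing import Callable, Dict, List


def rolling_restart(instances: List[str],
                    set_weights: Callable[[Dict[str, int]], None],
                    restart: Callable[[str], None],
                    wait_healthy: Callable[[str], None]) -> None:
    """Restart each instance in turn while the others carry all the traffic."""
    if len(instances) < 2:
        raise ValueError("zero-downtime restarts need at least two instances")
    for target in instances:
        # Divert all traffic away from the instance we're about to restart.
        set_weights({name: 0 if name == target else 1 for name in instances})
        restart(target)       # e.g. restart HAProxy so it loads the new certs
        wait_healthy(target)  # don't move on until it's serving again
    # Finally, return to sending traffic to every instance.
    set_weights({name: 1 for name in instances})
```

Calling it with `["haproxy2", "haproxy1"]` reproduces the order above: traffic goes to HAProxy1 while HAProxy2 restarts, then the reverse, and finally back to both. The guard on instance count reflects why a single HAProxy VM couldn't meet the zero-downtime criterion.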

This setup allows us to bring new websites and customers online in seconds with zero downtime and in a fully automated manner.

Combined with automated generation of certificates using Let's Encrypt, this setup now lets us bring new websites online with their own SSL certificates in under a minute, with zero downtime.
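One practical step between Let's Encrypt and HAProxy: HAProxy expects the certificate chain and private key together in PEM form, and a single file per site is the simplest layout. The sketch below assumes you already have the PEM text (for example the contents of certbot's `fullchain.pem` and `privkey.pem`); the `<hostname>.pem` naming and the `install_haproxy_pem` function are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def install_haproxy_pem(hostname: str, fullchain_pem: str, privkey_pem: str,
                        cert_dir: Path) -> Path:
    """Write certificate chain + private key into one PEM file for HAProxy."""
    cert_dir.mkdir(parents=True, exist_ok=True)
    target = cert_dir / f"{hostname}.pem"
    bundle = fullchain_pem.rstrip("\n") + "\n" + privkey_pem.rstrip("\n") + "\n"
    # Write to a temporary file, then rename it into place atomically so the
    # target never exists half-written. HAProxy may try to load any file it
    # finds in a crt directory, so trigger the restart only after this returns.
    fd, tmp = tempfile.mkstemp(dir=cert_dir, prefix=".tmp-", suffix=".part")
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(bundle)
        os.chmod(tmp, 0o600)  # the file contains a private key
        os.replace(tmp, target)
    except BaseException:
        os.unlink(tmp)
        raise
    return target
```

Once the file is in place on the shared drive, the rolling restart picks it up on both instances.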

If this sounds like the type of challenge you like to take on, we're always on the lookout for great developers, so drop us a note.
